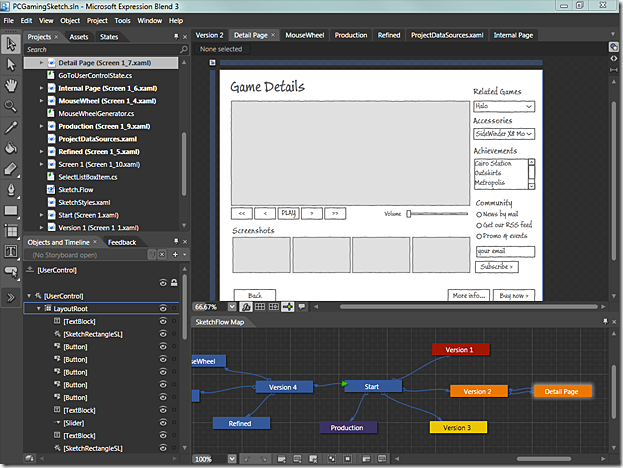

Today I attended a session on SketchFlow, a part of Expression Blend 3, which for us Microsoft Gold Partners is part of Expression Studio 3 on MSDN. SketchFlow is built on WPF and helps you quickly prototype, gather feedback on, and document projects that will use WPF or Silverlight for the UI. Prototyping in general lets you do design up front, which leads to more accurate estimates, and it helps you share ideas quickly, get feedback quickly, and fail faster when you are going down the wrong path.

We recently used a tool from Balsamiq to produce mockups for a new project. It was very helpful in crystallizing the design and gathering feedback from our client, and it gave our UI developers a starting point.

I’ve no doubt that SketchFlow is going to be our new prototyping tool – not only does it allow us to do all of the above, but it actually creates an application in WPF.

One of the great features of SketchFlow is that you are using real WPF, so the prototype is a real application and not just a set of screenshots. There are features built right in that let people provide feedback directly in the prototype, and ways for you to collect and review that feedback.

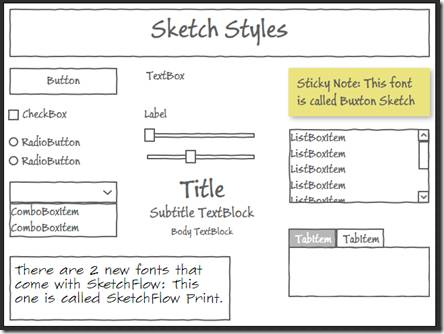

SketchFlow allows you to use Sketch styles to give your prototype a “draft,” or work-in-progress, look and feel. This actually matters: it gets you feedback at a higher level instead of letting people get bogged down in details like button size and color.

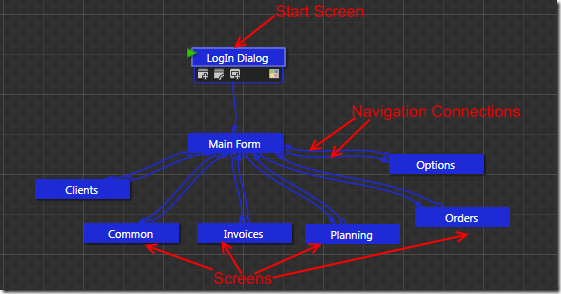

In SketchFlow you create a high-level map of the forms/windows that you are going to use in your application and then connect them together to create a flow. You can then select any screen on the map to mock up that particular form.

You can also quickly add sample data to your application by defining the data and filling it in right from within the application, or by connecting it to a database. You can drag and drop to create lists from your sample data, and adjust how the data is displayed right in SketchFlow. You can also put C# code behind various actions and events in the prototype to simulate aspects of the application you are prototyping, including storing global state that needs to carry over from one screen to another.
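As a rough illustration of the global-state idea, here is a minimal sketch in plain C#. It assumes nothing about SketchFlow itself: `PrototypeState`, its `Set` and `Get` methods, and the `"SelectedCustomer"` key are hypothetical names made up for this example, and the session did not necessarily show it done this way. The idea is simply that a static class lives for the lifetime of the application, so any screen's code-behind can read what another screen stored.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of SketchFlow or WPF: a static bag of values
// that stays alive while the prototype runs, so every screen can reach it.
public static class PrototypeState
{
    private static readonly Dictionary<string, object> Values =
        new Dictionary<string, object>();

    // Store (or overwrite) a value under a key.
    public static void Set(string key, object value)
    {
        Values[key] = value;
    }

    // Read a value back, returning the fallback if the key is missing
    // or holds a value of a different type.
    public static T Get<T>(string key, T fallback)
    {
        object value;
        if (Values.TryGetValue(key, out value) && value is T)
        {
            return (T)value;
        }
        return fallback;
    }
}

public static class Demo
{
    public static void Main()
    {
        // In a prototype, this line might sit in one screen's click handler...
        PrototypeState.Set("SelectedCustomer", "Contoso");

        // ...and this one in the code-behind of the screen navigated to next.
        Console.WriteLine(PrototypeState.Get("SelectedCustomer", "(none)"));
    }
}
```

For a throwaway prototype a simple dictionary like this is probably enough; a real application would more likely use typed view models or application-level resources.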

Once again, SketchFlow uses WPF, so you can easily use the prototype as the basis for a WPF application, and with some limitations you can use it for Silverlight as well. You can certainly use it to prototype other application types such as web sites, but it will not generate ASP.NET or similar: the output is WPF or Silverlight only.

SketchFlow also has a cool feature that exports your prototype to Microsoft Word, creating a table of contents and including all of the screenshots. This will certainly help us create documentation much more quickly: we copied and pasted about 40 images into a recent specification document, and in future specs this will automate that task in one click!

After attending the SketchFlow session I immediately installed Expression Studio 3, and I am getting ready to use it with our designer to prototype the front end of a new project I have coming up.